xGCN: An Extreme Graph Convolutional Network for Large-scale Social Link Prediction

Abstract:

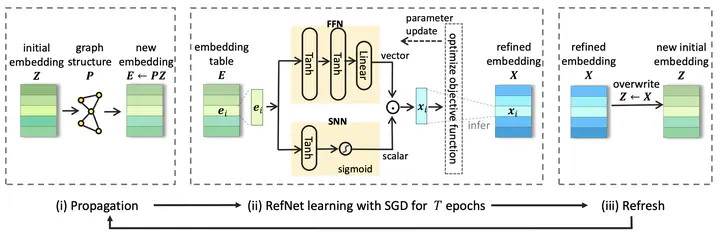

Graph neural networks (GNNs) are widely used in real-world applications, yet in transductive learning they still face challenges in accuracy, efficiency, and scalability. These challenges stem from the large number of trainable parameters in the embedding table and from the paradigm of stacking neighborhood aggregations. This paper presents xGCN, a novel model for large-scale network embedding that offers a practical solution for link prediction. xGCN addresses these issues by encoding graph-structured data in an extreme convolutional manner, and it has the potential to set a new performance record for network embedding-based link prediction. Specifically, instead of assigning each node a directly learnable embedding vector, xGCN treats node embeddings as static features. It uses a propagation operation to smooth the node embeddings and relies on a Refinement neural Network (RefNet) to transform the coarse embeddings produced by this unsupervised propagation into new ones that optimize a training objective. The output of RefNet, a set of well-refined embeddings, then replaces the original node embeddings. This process is repeated iteratively until the model converges to a satisfactory state. Link prediction experiments on three social network datasets show that xGCN not only achieves the best accuracy among a series of competitive baselines but is also highly efficient and scalable.
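The propagate-then-refine loop described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The abstract does not specify the propagation operator, the RefNet architecture, or the loss, so everything below is assumed for illustration: the function names, the symmetric-normalized dense adjacency, the single linear map standing in for RefNet, the logistic loss with uniformly sampled negatives, and all hyperparameters.

```python
import numpy as np

def normalized_adjacency(edges, num_nodes):
    """Dense symmetric-normalized adjacency D^-1/2 A D^-1/2 (assumed operator)."""
    A = np.zeros((num_nodes, num_nodes))
    for u, v in edges:
        A[u, v] = 1.0
        A[v, u] = 1.0
    deg = A.sum(axis=1)
    # Isolated nodes get a zero scaling factor instead of a division by zero.
    inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(np.maximum(deg, 1e-12)), 0.0)
    return inv_sqrt[:, None] * A * inv_sqrt[None, :]

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_refnet(H, pos, neg, W, lr=0.05, steps=50):
    """Fit a linear stand-in for RefNet (Z = H @ W) with a logistic link loss."""
    pairs = np.vstack([pos, neg])
    labels = np.concatenate([np.ones(len(pos)), np.zeros(len(neg))])
    u, v = pairs[:, 0], pairs[:, 1]
    for _ in range(steps):
        Z = H @ W
        scores = np.sum(Z[u] * Z[v], axis=1)       # dot-product link scores
        g = (sigmoid(scores) - labels) / len(pairs)  # dLoss/dScore per pair
        grad_Z = np.zeros_like(Z)
        np.add.at(grad_Z, u, g[:, None] * Z[v])    # accumulate over repeated nodes
        np.add.at(grad_Z, v, g[:, None] * Z[u])
        W = W - lr * (H.T @ grad_Z)                # only W is trained
    return W

def xgcn_sketch(edges, num_nodes, dim=8, rounds=3, seed=0):
    rng = np.random.default_rng(seed)
    P = normalized_adjacency(edges, num_nodes)
    # Node embeddings are static features here, not trainable parameters.
    X = rng.normal(scale=0.1, size=(num_nodes, dim))
    W = np.eye(dim)
    pos = np.array(edges)
    for _ in range(rounds):
        H = P @ X                                   # unsupervised propagation
        neg = rng.integers(0, num_nodes, size=pos.shape)  # assumed negative sampling
        W = train_refnet(H, pos, neg, W)            # refine toward the objective
        X = H @ W                                   # refined embeddings replace the old ones
    return X

edges = [(0, 1), (1, 2), (2, 0), (3, 4), (4, 5), (5, 3)]
embeddings = xgcn_sketch(edges, num_nodes=6)
```

In this sketch the only trainable parameters are the entries of `W`, whose count is independent of the number of nodes. That mirrors the motivation stated above for avoiding a learnable embedding table. A graph of realistic size would need a sparse adjacency matrix rather than the dense one used here.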